19:54:50 | INFO sync.util :: STARTING: Sync metadata for my-custom-postgres-driver Database 3 'my_test_database'

v0.35.4, fresh Metabase db, custom test data warehouse (Postgres). Note that each sync is a manually triggered sync (api/database/:id/sync_schema), not the one that runs automatically straight after adding a database. During sync, Metabase uses ~50% of a CPU with spikes between 100% and 150%.

Are these results reproducible on your end? So a 2x slow-down in sync-fields would snowball into a big resource usage impact. Each database typically has 50 to 100 tables, and a total of 2k or more fields across those tables. Instances typically have 2 to 8 or sometimes ~50 databases.
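For anyone trying to reproduce this, the manually triggered sync mentioned above could be scripted roughly as follows. This is only a sketch: the base URL, database id and session token are placeholders, the helper names `build_sync_request` and `trigger_sync` are made up for illustration, and it assumes the instance accepts the `X-Metabase-Session` header for API authentication.

```python
import urllib.request


def build_sync_request(base_url: str, database_id: int, session_token: str) -> urllib.request.Request:
    """Build (but do not send) a POST to api/database/:id/sync_schema."""
    url = f"{base_url.rstrip('/')}/api/database/{database_id}/sync_schema"
    return urllib.request.Request(
        url,
        data=b"",  # empty body; the endpoint only needs the id in the path
        method="POST",
        headers={"X-Metabase-Session": session_token},  # assumed auth header
    )


def trigger_sync(base_url: str, database_id: int, session_token: str, timeout: float = 30.0) -> int:
    """Send the sync request and return the HTTP status code."""
    request = build_sync_request(base_url, database_id, session_token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Hypothetical usage (placeholder values, not taken from the report):
# status = trigger_sync("http://localhost:3000", 3, "<session token>")
```

Calling `trigger_sync` for several database ids in quick succession would approximate the "multiple concurrent syncs" situation, since the sync itself runs in the background on the Metabase side.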

This work occurs every hour or less, and as part of that process a database sync is requested. The application does things like setting up new databases, maintaining the data reference, creating template / starter questions, etc. In my situation, there is an external application managing the Metabase configuration via the Metabase API. There were a lot of changes between v0.35.4 and v0.39.2 so I'm not sure where the issue came from. I haven't copied the logs but results were similar between v0.39.1 and v0.39.2. It seems that somewhere between v0.35.4 and v0.39.2, the sync-fields step started taking twice as long with the same data warehouses. Would it be feasible to separate the limit by database? So an instance with 20 databases isn't limited to 1 table show/hide at a time, but can do 20.

Anyway, I did some further testing and I think this might instead be related to the Metabase database sync step sync-fields. So then perhaps reproducing this issue involves running multiple concurrent database syncs, and then trying to use the UI / call the ... I was tailing the postgres logs with log_connections = on and the hide/un-hide action was associated with a new connection each time.


Maybe the wider performance impact across the app is due to other parts of the code waiting on sync activity to complete. In other words, a bottleneck for any sync activity across the instance. It seems that in #15916 a single-thread thread pool was added for table metadata updates. Given the reports of better performance in v0.39.1, I had a look at the changes between versions. For example, select * from metabase_database runs in 9ms. The back-end databases are functioning normally: watching incoming Metabase queries with pg_stat_activity and then running them manually, the queries execute in sub-second times. For example, 5 seconds for /api/current (loaded every page), or 30 seconds to get /api/databases (the Admin -> Databases page). Usually that is fine, but with v0.39.2 the instance slows to a crawl and the Metabase UI is unusable. The instance has 20+ Metabase databases, which are synced hourly or so.
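To illustrate why one shared single-thread pool could become an instance-wide bottleneck, here is a toy model comparing it with one worker per database. It is not Metabase's actual implementation (that code is Clojure); the function names and the 2-second task duration are invented purely for the comparison.

```python
from collections import defaultdict


def makespan_shared_worker(tasks):
    """Total wall time when a single worker handles every task in turn.

    tasks: list of (database_name, seconds) pairs.
    """
    return sum(seconds for _, seconds in tasks)


def makespan_per_database_worker(tasks):
    """Total wall time when each database has its own single worker.

    Tasks for the same database still run one at a time, but different
    databases proceed in parallel, so the slowest database sets the total.
    """
    per_database = defaultdict(float)
    for database, seconds in tasks:
        per_database[database] += seconds
    return max(per_database.values(), default=0.0)


# Hypothetical load: 20 databases, one 2-second metadata update each.
tasks = [(f"db{i}", 2.0) for i in range(20)]
shared = makespan_shared_worker(tasks)              # 20 updates back to back
per_database = makespan_per_database_worker(tasks)  # all 20 in parallel
```

Under these made-up numbers the shared worker needs 40 seconds to drain the queue while per-database workers need 2, which is the kind of gap the "20 databases can do 20 at a time" suggestion is aiming at.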

Using CentOS 7 with Postgres 10 back-end and warehouse.

It's annoying - we have to restart Metabase very often as a quick fix.

Additional: I also have poor performance on Metabase v0.39.2.

Information about your Metabase Installation: "java.vm.name": "OpenJDK 64-Bit Server VM". We use Google auth for user authentication. As a Metabase DB we use AWS RDS PostgreSQL. We run Metabase in a k8s cluster in AWS, using a Helm chart.

We do not see any spike in CPU load or RAM usage during the periods when Metabase responds slowly.


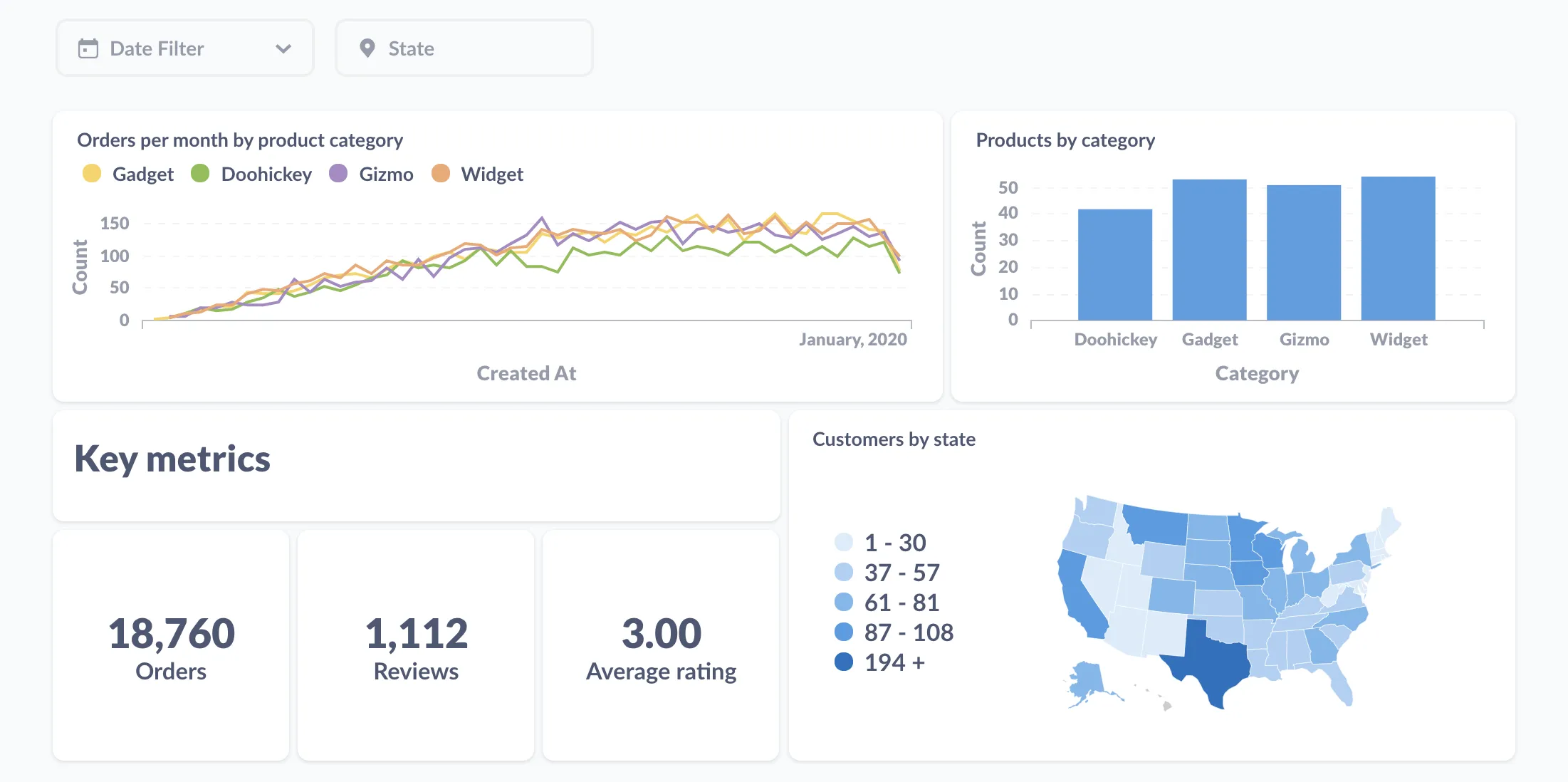

This is a graph from Datadog for the past month. We upgraded on the 11th of June.
